What is the problem?

Social networks have seen a surge in military content — including posts that are illustrated with images meant to stoke emotions. However, not all these "photos" are real – an increasing number are created with the help of artificial intelligence (AI).

An example of an AI-generated image on TikTok.

A recent example that went viral on Meta platforms is an image purporting to show two cats trapped in the rubble after the Russian shelling of Ukrainian cities. Some users understood this was artificially created content, but some believed it was authentic, out of sympathy for how animals suffer from war.

An example of an AI-generated image in a Facebook post.

There have already been scandalous cases of AI-generated images circulating in the Ukrainian info space. One unfortunately common theme is misusing such content, as happened with the UNIAN news agency. The agency labeled the AI-generated content as real photos, after which some other media outlets reposted the news with the same labeling, misleading readers.

AI-generated images that users spread, implying that they showed real scenes from Ukraine.

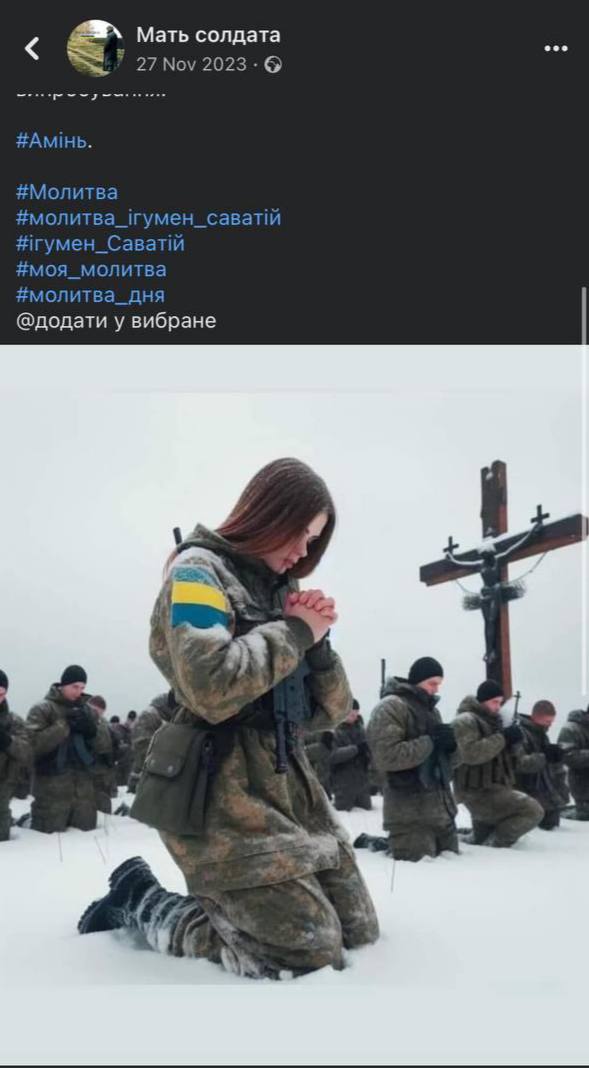

It can be difficult for an average, inexperienced user to distinguish between authentic and generated images, because they often look quite photorealistic, as in this post, which seems to depict Ukrainian military personnel.

Looking closely at the photo reveals many obvious inconsistencies: the faces of the soldiers in the background are blurred; the flag on the girl's hand has three colors instead of the normal two; the girl's hands are anatomically incorrect, as she has no left thumb; and the soldiers' camouflage looks Russian rather than Ukrainian and bears no distinguishing Ukrainian insignia.

Another example is a post with a picture claiming to show a baby who survived a shelling in the city of Dnipro. The photo reached a large audience and prompted a flurry of emotional comments.

However, the image shows clear signs of AI generation: it looks somewhat blurry, retouched, and almost painted, which, to the trained eye, makes it obvious that it is not an authentic photo.

Tetiana Avdeyeva, a lawyer and expert in artificial intelligence and media literacy at the Digital Security Laboratory, comments that such images overload people's social feeds with sensitive information that strongly affects their psychological state. As a result, the information environment turns negative, and the discussion is based not on real events but on generated content, which artificially creates a negative information field.

Often, users see no problem in using emotional AI-generated images instead of real ones, arguing that such pictures are acceptable as illustrations that attempt to reflect reality, even if they are not actual photographs.

What is the solution?

Simply prohibiting the use of artificial intelligence by an ordinary user is not a feasible option. Instead, it is much more practical to educate social media users about their own responsibility for their digital footprint.

Your digital footprint is essentially all the data you've ever left online, whether it's a comment on a post you wrote in 2015, an ad you clicked on while browsing, or an image you shared from someone you know. This information does not disappear without a trace. The phrase "what goes on the Internet, stays on the Internet" illustrates the principle of the network: all actions in the digital world are stored and can have consequences. That's why you should be aware of what you post, view, or comment on.

Avdeyeva says it is worth remembering that, regardless of who the author is, certain types of content are prohibited for everyone to distribute: hate speech, war propaganda, and pre-election campaigning that violates local rules. A person can be held legally responsible for this on an individual level.

Tetiana Avdeyeva. Photo from the speaker's personal archive

Secondly, it is wrong to think that if you do not have a large enough audience on social networks, then your voice is invisible. The algorithms and trends of online platforms are designed so that every voice can be heard. For example, on Twitter, a post from an account with even 20 followers can go viral thanks to reposts.

"On the one hand, it's cool. For example, in authoritarian states, where the government tries to persecute activists, journalists, and human rights defenders and does not allow them to express themselves, such a function helps them reach the audience with high-quality, truthful information," says the specialist.

Your digital voice goes far beyond your page, and multiple voices coming together have a powerful impact on shaping the information space.

How does it work?

Is content generated by artificial intelligence always negative?

Avdeyeva says that sometimes, using images generated by a neural network is supported by a good motivation — to protect privacy.

"Many real images contain personal data – these images are not always pleasant, and the person depicted does not always want to be on the front pages. Therefore, for example, the media can use this principle. This is if what we are talking about has a bona fide motivation to protect personal data," says the expert, adding that if you weigh the benefits and harms of such a decision, the harm from the consequences will prevail – so you need to be especially careful with this.

Artificial intelligence itself is not entirely a product of hell either: it helps automate processes and bring to life things we could only dream about. For example, the character Gollum from the film The Hobbit was created by a computer system using motion capture: the actor wore a suit covered with many dots, and computer-generated imagery was overlaid on his performance.

There are many options for using AI for something good. The question is about having regulations on where the red lines lie, and standards for what can and cannot be done.

Consequences of using AI-generated images

When we get used to emotionally charged images, there is a risk that ordinary pictures of reality will no longer impress anyone — people will not find them as revealing as generated ones.

Apart from legal consequences, both individuals and the media face a possible loss of trust.

"When you start generating content without warning or generating content on sensitive topics, it helps to create a bubble of mistrust in the media, and in the long term, it's quite dangerous. This greatly undermines the attempt to create an image of media reliability," says the expert.

She adds that the consequence of such actions may be that audiences will perceive the media as an unreliable source of information. On this basis, it will be more difficult for honest media to work, as it will be necessary to prove that their information is true every time.

There is also a risk that your generated image on a sensitive topic, even one you have labeled as "AI-generated," will be shared by other users without this disclaimer. At some point, people will no longer know that this is artificially generated content and will distribute it, believing it to be authentic.

How Russian propaganda uses user posts to spread anti-Ukrainian narratives

Avdeyeva explains that the worst thing is when posts with neural network-generated images are used by Russian propaganda: "Russians are starting to say that Ukrainians are deliberately creating false content to spread disinformation about Russian actions in Ukraine. Later, based on this, they will claim that any photo and information is fake. I think everyone remembers when the Russians tried to massively share the idea that the photos from Mariupol are fake."

A Russian media outlet insinuating that the video from Bucha was fake.

Such narratives influence foreigners and form a prejudiced opinion about the Russian-Ukrainian war. In particular, the Russians spread the manipulation among foreigners that the terrible photos of the murdered Ukrainians from Bucha and Irpin were "staged by the Kyiv regime for the Western media," which really influenced some foreign users, who started to look at photos from Ukraine with general distrust.

"The more we create generated content related to the war, the more the narrative about pictures being fake will spread. If there is already AI-generated content by Ukrainians, then why wouldn't real photos of war crimes also be generated? I would not advise the media to generate anything on sensitive topics because it will further blur the concept of what is real and what is unreal," comments the expert.

The lawyer shares that on this topic, she and her team cooperate with international media and intergovernmental organizations to constantly refute narratives and prove that Ukrainian media are unbiased, adhere to standards, are reliable, and cover information as it should be.

"If Ukrainian media distribute AI-generated content, it will be increasingly difficult to interact with it, it will be more difficult to promote the idea that you can take information from the Ukrainian media, and not rely only on the resources of your own international investigators or reporters," she adds.

Is it ethical to use AI-generated images?

Neural networks are trained to generate images based on content previously created by humans. Because of this, the discussion in the information space lingers on whether it is ethical to use artificial intelligence when it uses authors' works without paying them and without their consent.

The question is open and complex. It is not even about the use of images and photos, as such, but about how the system is programmed. For example, when we ask to generate something, it is not known based on which specific input data and pieces a particular image is generated. We can ask to create a castle with unicorns, and we do not know based on whose photo of the castle the image will be generated.

It is not only an issue of ethics but also of compliance with copyright law, according to which we need the author's permission to use their work for informational purposes.

Regulation mechanisms

Existing regulatory mechanisms are aimed at the developers of online platforms. For example, the Council of Europe is developing a project on artificial intelligence in which the regulatory solution provides for content labeling, but by end users, which partially removes this obligation from companies. The project says companies should ensure transparency so that users understand when they are interacting with artificial intelligence and when a product's author is artificial intelligence, and should provide users with labeling tools.

Companies like Facebook, Twitter, Google, and Microsoft are already introducing labeling, but it is still at the beta-testing stage. For example, Microsoft offers a function that attaches an analog of an electronic digital signature to each piece of authentic content. If a public figure issues a statement, the signature will not be a visible watermark but part of the underlying code of the uploaded video, and it will be possible to check whether this code matches the publicly available key. Generated content would not carry this signature of originality. The expert also suggests another option: flagging such posts the way political ads or paid posts are flagged. However, all these solutions are aimed at bona fide authors.
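The idea of checking content against a publicly available key can be illustrated with a minimal Python sketch. This is a toy textbook-RSA example, not Microsoft's actual mechanism: the key values, the function names `digest`, `sign`, and `verify`, and the sample byte strings are all assumptions made purely for illustration, and a key built this way is not secure enough for real use.

```python
import hashlib

# Toy RSA key built from two well-known Mersenne primes.
# Illustration only: real systems use vetted libraries and much larger keys.
P = 2**89 - 1
Q = 2**127 - 1
N = P * Q                               # public modulus
E = 65537                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))       # private exponent, kept by the publisher


def digest(content: bytes) -> int:
    """Hash the content and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N


def sign(content: bytes) -> int:
    """Publisher side: sign the content hash with the private exponent."""
    return pow(digest(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Anyone: check the signature using only the public values N and E."""
    return pow(signature, E, N) == digest(content)


# Hypothetical content: the bytes of an authentic video and of a look-alike.
original = b"official statement video bytes"
signature = sign(original)

print(verify(original, signature))
print(verify(b"generated look-alike bytes", signature))
```

The point of the sketch is that verification needs only the public values, so any viewer can check a file, while only the holder of the private exponent can produce a signature that passes.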

How does it work in Ukraine?

In Ukraine, neither the law nor media ethics yet gives an answer on how to qualify generated content and whether it can be considered trustworthy and true. Avdeyeva believes that, despite this, Ukraine is moving toward regulation. For example, as one of the steps, the digital ministry issued recommendations on the responsible use of artificial intelligence in the media.

Ukraine plans to implement the Digital Services Act, which sets content requirements for online platforms and aims to ensure content regulation and transparency. Although these requirements are quite general and basic, the act introduces the institution of trusted partners. Meta already has some such partners: Cyfrolab, Internews Ukraine, and others. These are organizations with which platforms work closely to improve moderation policies and resolve controversial issues. For example, when the hashtags #Bucha and #Irpin were taken down, Cyfrolab actively pushed for the platform to stop blocking posts based only on a specific word and to reinstate the posts containing evidence of war crimes.

The expert believes that a solution will be offered, but it will take time, and for now, it is faster to monitor and regulate the generated content manually.

Tips for users on how to independently recognize generated content

Analyze strange changes in the image, and pay attention to:

- The way everything that is outside the oval of the face looks — earrings, jewelry, how the ears look in general.

Earrings in such photos are usually blurred. Photo: Kyle McDonald

- What does the background look like — is anything missing or blurred?

- Clothes — is the zipper properly positioned? Does the hoodie have ties?

- Hair — are the strands "broken," or does it look natural?

Photo: How to recognize fake AI-generated images, Kyle McDonald

- The pupils — their appearance can help you detect deepfakes, or an image overlaid on a video.

The expert gives an example: at the beginning of the full-scale invasion of Ukraine, unknown persons created a mask of Kyiv Mayor Vitalii Klitschko and called the mayors of other European cities: Barcelona, Berlin, and several others. The conversations lasted up to 30 minutes until it became clear that it was not Klitschko who was speaking, because the narratives he voiced did not match Kyiv's real position at all. In addition, filters applied in real time usually cannot keep up with rapid movement or mirroring of the image, so they "lag." Therefore, you can ask the person to turn their head if the video is being broadcast live, or analyze a finished video in special applications.

- A painted or blurred effect on the image — do surfaces and faces look glossy, as if retouched?

The most important advice: if you doubt that information is true, do not spread it thoughtlessly. Follow the rules of media literacy, and don't litter your digital footprint.
