What is the problem?

AI allows people to create cute "AI cats," design avatars, or imagine what our cities might look like in a hundred years. However, this technology is also being used to deceive and manipulate.

For example, while scrolling through your Facebook feed, you might come across adorable "mammal dinosaurs." They don't look scary or bloodthirsty; instead, they have big, kind eyes and a sweet smile. They're endearing, prompting you to like or even share the post on your own page.

Photo of "mammal dinosaurs." Photo from Ihor Rozkladai's Facebook

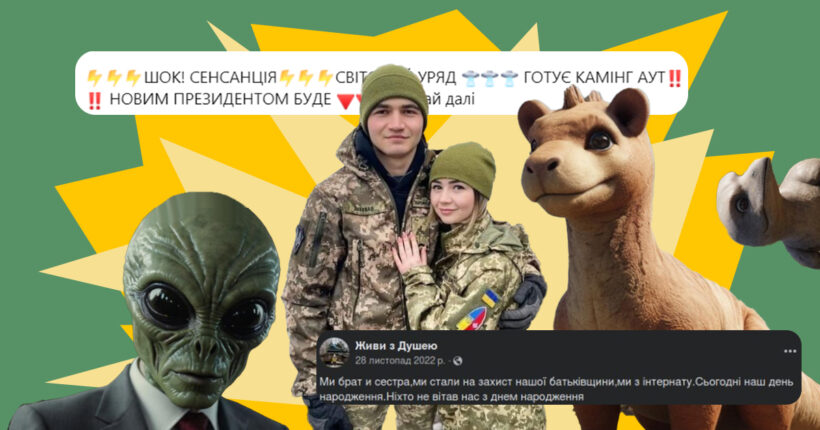

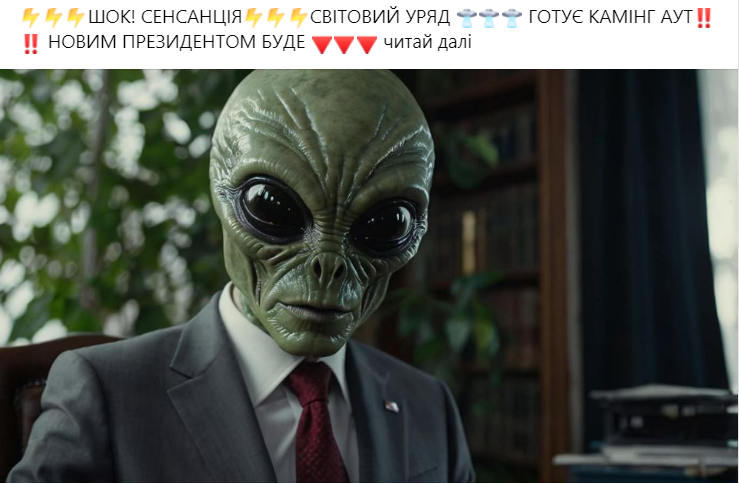

However, after a few days, the content of this seemingly innocent post can change. The photo might transform into an image of an alien or a reptilian figure representing a secret world government. A mysterious caption might appear, suggesting a change in power. And beneath it all, there might be your "like."

Photo of the content replacement (before and after the change). Photo from Ihor Rozkladai's Facebook

This demonstration was part of an experiment that Ihor Rozkladai, a content moderation expert at CEDEM, conducted on his Facebook page. The aim was to illustrate how social media users can be manipulated through AI-generated photos. Such manipulation isn't just harmless fun; it can also be used for criminal activities.

"Here's how it works: You get 100+ likes for a cute 'mammal dinosaur' photo. With just a simple change, I can turn this post into something about conspiracy. By liking and sharing such posts, we inadvertently become amplifiers of misinformation," explains Ihor Rozkladai about his experiment's results.

This is just one example of how AI can be exploited. You could unknowingly be aiding scammers or spreading Russian propaganda. According to Rozkladai, such misleading content is increasingly appearing in feeds on platforms like Facebook, Instagram, and TikTok.

What is the solution?

To avoid liking or spreading fakes and manipulations generated by AI, it's crucial to understand the technology's capabilities. Today, it's possible to fake the face and voice of virtually anyone, meaning not every video featuring a military person, politician, or celebrity is authentic.

"In an era of rapid technological advancement, the key to staying safe is critical thinking and a basic understanding of how these technologies work. It's not about knowing every detail, but having a fundamental grasp of AI's capabilities. This should be part of our information hygiene," says Rozkladai.

Therefore, Rubryka, in collaboration with experts, explains how AI-generated content can be used maliciously and provides guidance on recognizing AI in videos, audio, and text.

How does it work?

Russian propaganda frequently exploits AI-generated content, using artificial intelligence to create deepfakes that replicate the faces and voices of prominent individuals. These fake videos are then used to propagate Russian narratives.

A notable example of deepfake technology was a video purportedly featuring Ukraine's President Volodymyr Zelensky at the start of the full-scale invasion. In the video, Zelensky allegedly urged Ukrainian troops to surrender and claimed he intended to flee the country. However, the video was poorly executed, making it evident that it was a fabrication.

In response to this false video, President Zelensky stated, "Regarding the recent childish provocation suggesting I called for surrender, I can only urge the military of the Russian Federation to lay down their arms and return home. We are here defending our land, our children, and our families. We will not lay down our arms until we achieve victory."

This video was among the first deepfakes targeting President Zelensky, but it was far from the last. Subsequent fake videos continued to misuse AI to spread misinformation and discredit the president.

One such video depicted Zelensky supposedly performing an oriental dance to Russian music in a disco bar setting. In the video, he is shown laughing and running around the venue in women's clothing, before dramatically shedding a red cape and dancing even more energetically.

How to recognize AI-generated content?

- Assess content and believability

Start by evaluating whether the video portrays famous individuals saying or doing something unusual or illogical. For example, if a video shows a leader making strange statements or engaging in implausible activities, this is a strong indicator that the content might be fake. Pay close attention if the content aligns with propaganda narratives.

- Check for audio and video defects

AI-generated sound may have unnatural frequencies, which can be challenging to detect without specialized software. However, you can check the lip sync: whether the movement of the lips matches the audio track.

Errors in AI-generated videos are often more apparent. Look for issues around the contours of the face, especially near the ears and cheeks, which may appear unnaturally blurred during head movements. Wrinkles and skin tone inconsistencies can also reveal artificial manipulation.

Attention to detail is crucial in identifying AI-generated content and protecting oneself from misinformation.

News with an AI trail

Artificial intelligence is not only used for creating deepfakes or manipulating photos and videos but also for generating fake news and promoting narratives on social media.

For instance, in May of this year, OpenAI, the company behind ChatGPT, identified five online entities using AI to manipulate public opinion globally. These entities, involving state structures and private companies from Russia, China, Iran, and Israel, utilized AI to generate social media posts, translate and edit articles, and craft headlines. The aim was to support political campaigns and sway public opinion on geopolitical conflicts. Notably, the Russian operation known as Doppelganger used AI to post anti-Ukrainian comments on the social network X.

"I think it's quite challenging to spot AI-generated text. However, AI-generated content often includes errors, whether factual or stylistic, though not all AI-produced texts will necessarily exhibit these flaws," says Ihor Rozkladai.

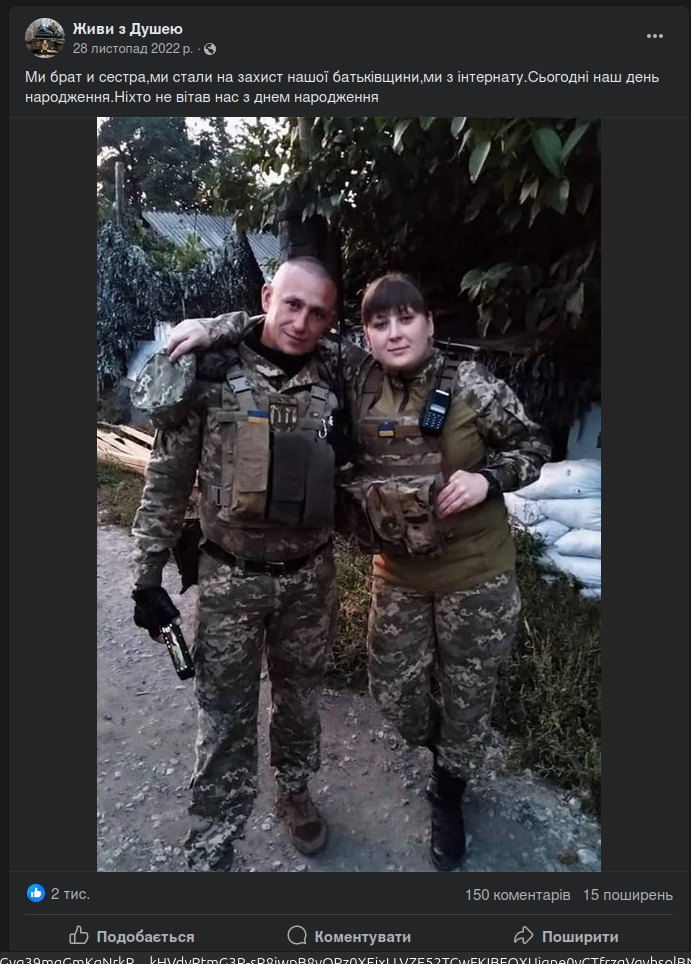

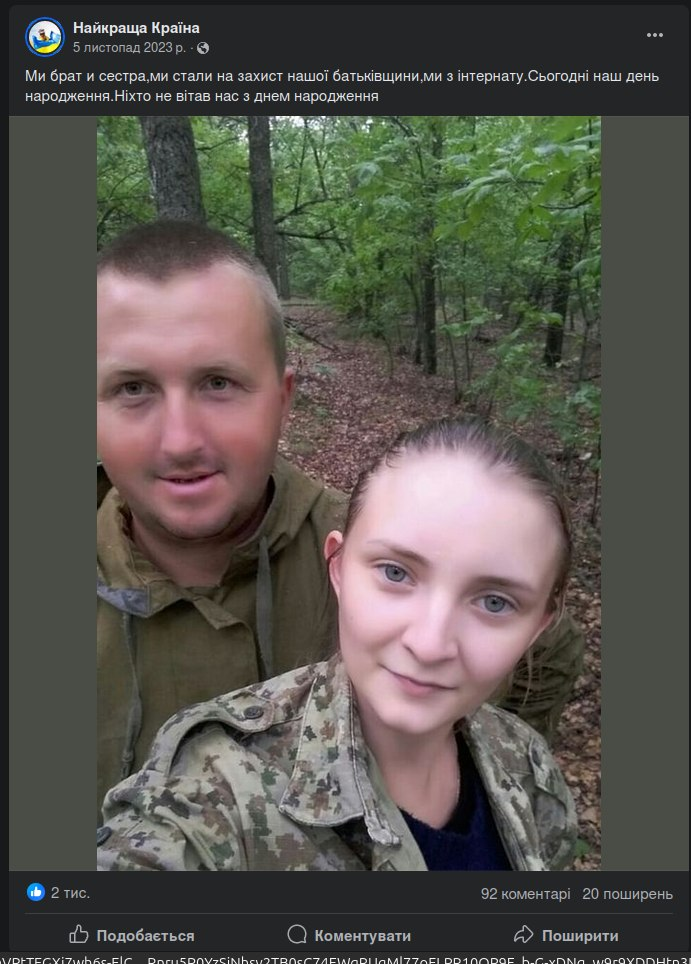

Another form of AI-generated malicious content involves posts designed to gain likes and boost page popularity. These often use emotional or sensational content to subtly influence your feed and integrate Russian propaganda. While posting an AI-generated picture of Patron the Dog for likes might seem harmless, there are increasingly frequent posts like, "A brother and sister from a boarding school came to defend our Motherland, but no one congratulated them on their birthday," which can carry more significant implications.

The "sad" story depicted in these posts is entirely fake. The faces of the Ukrainian soldiers in the images are generated by artificial intelligence. These AI-created faces are of high quality, making it challenging to identify the fakes. Many Ukrainians believed the fabricated story and shared these posts to encourage people to congratulate the Ukrainian service members.

However, by engaging with these posts, users were actually interacting with accounts, mostly registered in Armenia, that have no real connection to the Ukrainian Defense Forces. The reason for the Armenian accounts' involvement remains unclear, but the emotional posts are often followed by posts nostalgic for the Soviet Union.

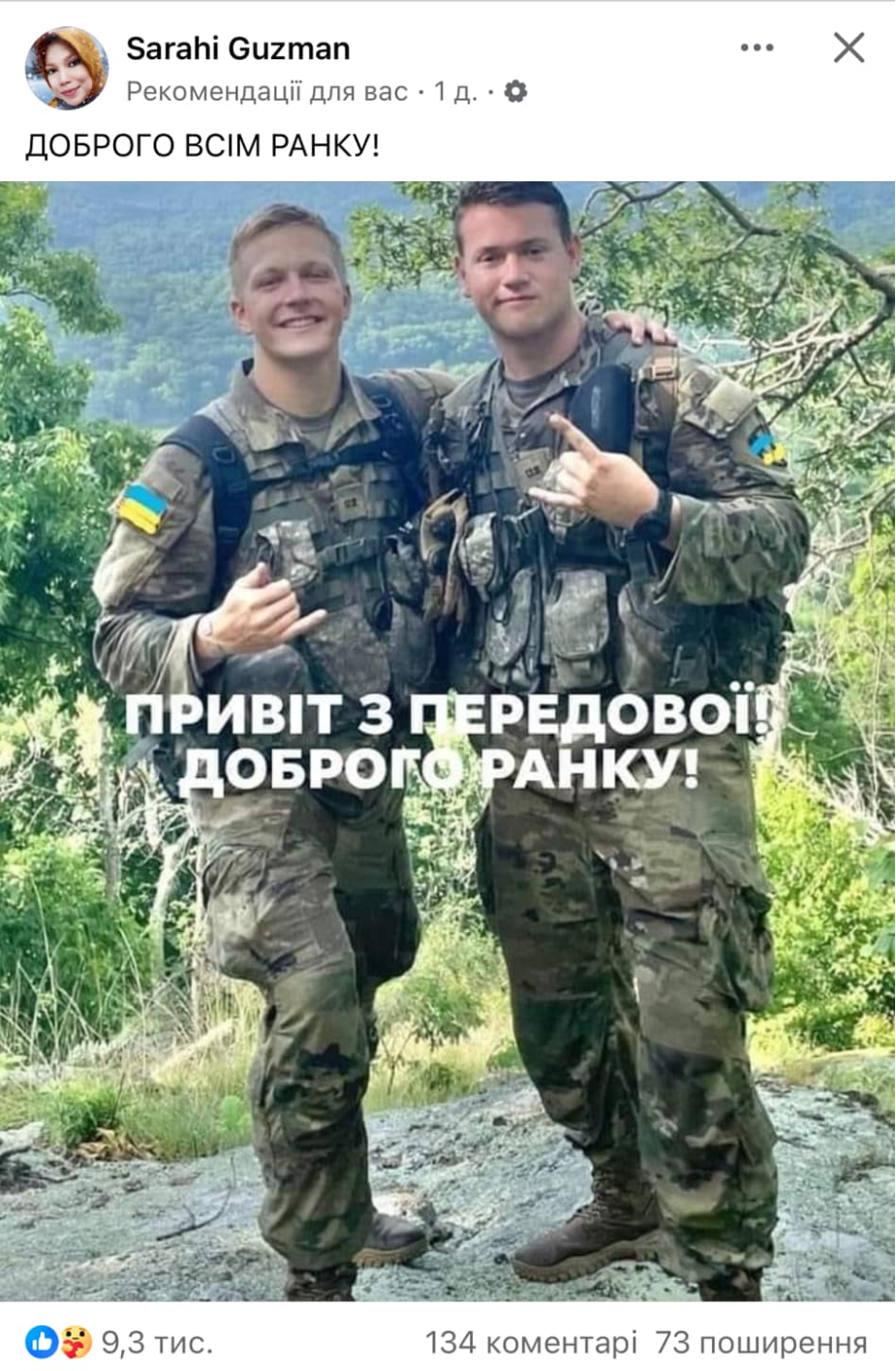

Similarly, fake posts featuring "sad Ukrainian soldiers" have been found from accounts registered in Indonesia. Here, the change is in the account's content rather than the feed: the initial emotional posts are replaced by "betrayal" narratives and links to pro-Russian channels.

You can find various examples of similar manipulations online. They generally follow a pattern: they first present sensitive and emotional content, often related to the Ukrainian military, and then, from the same or similar accounts, pro-Russian content appears in the feed. Thus, when encountering emotional content, take a moment before sharing it—there might be an attempt to deceive you.

Fraudsters also exploit artificial intelligence. For instance, video stories from allegedly Ukrainian TV channels were circulated on TikTok, featuring presenters discussing a fictitious law (No. 3386) that supposedly offered cash payments to Ukrainians who remained in the country after February 24, 2022. AI was used to mimic the voices of Ukrainian TV presenters.

According to the Center for Countering Disinformation of the National Security and Defense Council, these videos aimed to promote anonymous Telegram channels. The links to these channels were included in the video descriptions. Similar tactics can be used by fraudsters to redirect users to fake sites intended to steal personal information.

Additionally, AI can create videos in which the faces of Ukrainian TV presenters or popular figures are stolen and used to advertise products, casinos, or other misleading offers. Such manipulations are already appearing on social media. It's crucial to remember that not everything you see online is genuine.

When scrolling through your feed and encountering an emotional video or a purported message from Zelensky about surrender, stop and consider whether this could be fake AI-generated content. First, evaluate the content's believability: Would this person genuinely say that? Check if the voice sounds mechanical and examine details such as lip-sync accuracy, skin tone consistency, and image clarity around the cheeks and ears. If everything checks out, then you can engage with the post with confidence.
